Co-Creation Cycle #3 - Final Report

Org Design Through AI-Facilitated Deliberation

By Artem Zhiganov, Harry Eastham, Eugene Leventhal, Jamilya Kamalova

September 2025

Introduction

The third Co-Creation Cycle (CCC3) successfully employed Harmonica as an “AI facilitation” platform to engage 40+ delegates in designing the DAO’s organizational structure. Over two weeks in July 2025, the cycle combined asynchronous AI dialogues with synchronous workshops to identify governance gaps, prioritize initiatives, and design council structures.

This report serves two primary purposes:

- For Governance Practitioners: Providing a replicable model for AI-augmented deliberative processes in DAOs
- For the Scroll Community: Documenting the process and outcomes for transparency and future iteration

Following the success of CCC1 and CCC2, Scroll DAO’s third Co-Creation Cycle marked an evolution in participatory governance design. This cycle pioneered the use of AI-facilitated deliberation to address what the researchers from Metagov identified as the fundamental challenge of online governance: managing participant attention across complex decision spaces while maintaining depth and quality of engagement.

Key outcomes included clear prioritization of six domains (Education, Strategy, Branding & Marketing, Treasury Management, Operations, and Accountability), actionable recommendations for council formation, and a validated framework for future co-creation cycles.

This report documents not just what was accomplished, but how AI augmentation can enhance rather than replace human deliberation in governance contexts. It provides both a case study for other DAOs and a foundation for Scroll’s continued governance evolution.

Background

The Challenge

As Scroll DAO matured through 2025, three interconnected challenges emerged:

- Organizational Clarity: Unclear mapping of responsibilities across the ecosystem (DAO, Labs, Foundation)
- Scalable Participation: Need to engage 40+ delegates meaningfully without overwhelming their attention
- Decision Complexity: Multi-faceted organizational design requiring both breadth and depth of exploration

These challenges reflect what Metagov researchers called the “governance scalability problem”—the tension between inclusive participation and the finite attention resource of participants.

Why Use AI to Facilitate Deliberation?

Traditional governance approaches face an inherent trade-off: sync workshops provide rich interaction but limit participation, while async forums enable broad participation but often lack depth. Harmonica’s approach addresses this through what we term “scalable depth”—using AI to facilitate personalized, in-depth dialogues that can occur asynchronously while maintaining conversational coherence.

The Harmonica Platform

Core Capabilities

Harmonica represents a new category of governance tooling that combines:

- Async Conversation
  - Personalized 1:1 dialogues between participants and AI
  - Context-aware questioning that adapts to each participant’s background and interests
  - Multi-language support (e.g. successfully elicited responses from Spanish-speaking delegates)
- Intelligent Synthesis
  - Pattern recognition across dozens of conversations
  - Thematic clustering while preserving minority viewpoints
  - Evidence trails linking conclusions to specific participant quotes
- Flexible Facilitation
  - Real-time system prompt adjustments based on emerging insights
  - Mid-session pivots when initial approaches prove suboptimal
  - Custom insight extraction through the AskAI feature
- Interoperability
  - JSON export for processing in external LLMs and feeding results into whiteboards (e.g. Miro)
  - Pre-population of virtual whiteboards with synthesized statements
  - Continuous feedback loops between async and sync activities

Process Design

Overall Structure

CCC3 followed a deliberate “diverge-converge” methodology across two weekly phases, followed by a post-cycle synthesis period:

Week 1: Explore & Diverge (July 16-21)

├── Harmonica Session 1: "What should Scroll keep/stop/start doing?"

├── Synthesis and Analysis

└── Workshop 1: Translating ideas into domains

Week 2: Deliberate & Converge (July 22-28)

├── Harmonica Session 2: "How should we organize around domains?"

├── Synthesis and Pre-population

└── Workshop 2: Structure and metrics development

Post-Cycle: Synthesis (July 29 - August 8)

├── Proposals development by governance team

└── Report preparation

Key Design Principles

- Async-First: Harmonica sessions preceded workshops, allowing participants to develop ideas independently before live group ideation
- Progressive Refinement: Each activity built on previous outputs, creating clear “through lines”
- Flexible Participation: Multiple entry points accommodated varying availability and attention budgets
- Grounding in Evidence: All conclusions traceable to specific participant inputs

Session 1: Current Work and Opportunities

Objectives

Session 1 aimed to surface participants’ understanding of Scroll DAO’s current state and future opportunities without imposing predetermined frameworks. The conversational AI explored:

- Perceptions of existing initiatives and their impact
- Resource allocation effectiveness
- Gaps in current activities
- Opportunities for new work streams

Process

The session took place on July 16-19 and employed Harmonica’s adaptive questioning, with the AI facilitator:

- Starting broad to understand each participant’s context and expertise
- Progressively focusing on areas where participants showed deep knowledge

Mid-Session Adjustment

A unique strength of Harmonica demonstrated in Session 1 was the ability to adjust system prompts based on early responses. After initial conversations revealed that “what to stop” questions were premature for a young organization, facilitators:

- Analyzed patterns using AskAI: “What are participants saying about things to stop doing?”
- Identified the mismatch between question framing and organizational maturity
- Updated prompts to emphasize “what to start” while maintaining conversational continuity
- Achieved richer insights in subsequent dialogues

This flexibility would be impossible with traditional survey tools and demonstrates AI’s potential for responsive facilitation.

Results

Participation: 45 responses (36 completed, 80% completion rate)

Key Themes Emerged:

- Local Nodes: Universal recognition as Scroll’s “unique edge” versus other L2s
- Developer Ecosystem: Mentioned by more participants than governance, treasury, and marketing combined
- Education: Identified as critical but requiring coordination with Labs team
- Strategic Clarity: Consistent calls for clearer differentiation in the L2 space

Learnings from Session 1

What worked well

- A few delegates talked to Harmonica in Spanish, which should have allowed them to express their opinions more fully and clearly. The synthesized insights were not translated but were used to pre-populate the Miro board, and the workshops were held in English.
- Harmonica’s flexibility enabled us to refocus the survey after the first few responses showed that a different emphasis would yield more relevant insights. We reduced the focus on what Scroll should stop doing (since it was still in its early stages as an organization) and focused more on “what we should start doing”.

What needs improvement

- Some delegates requested clearer estimates of how long sessions would last
- No ranking/prioritization in Session 1: everything was treated as equally important
- No way for delegates to view the results post-session (if the session is not public)

Workshop 1: Desired Outcomes

Illustrative of Exercise 1

Illustrative of Exercise 2

Objectives

Workshop 1 aimed to bridge the gap between individual insights gathered in Session 1 and collective organizational design. The primary objectives were to:

- Validate and refine the AI synthesis from Session 1
- Identify gaps in coverage that async sessions might have missed
- Transform dispersed ideas into coherent domains of responsibility
- Establish initial prioritization to guide Session 2 exploration
- Create shared understanding of desired outcomes before diving into implementation details

Process

The workshop consisted of two identical 60-minute sessions scheduled to accommodate delegates from different time zones.

The process began with participants reviewing the results from Session 1, presented as a Miro board pre-populated with AI-synthesized insights organized thematically. This validation phase generated lively discussion as delegates confirmed the accuracy of the synthesis while adding nuanced context the AI had missed.

Next, participants identified missing areas through a group brainstorming exercise using digital stickies. This proved highly productive, as delegates surfaced initiatives and concerns that hadn’t emerged in individual conversations. A dot-voting exercise followed and quickly revealed collective priorities, though some participants struggled with the Miro interface.

The workshop then shifted to identifying desired outcomes for the DAO, with participants dividing into breakout rooms. While intended to enable focused discussion, the breakout format created some coordination challenges as groups interpreted the exercise differently. Deep-dive discussions in these smaller groups explored priority areas and generated rich detail about specific initiatives, though conversations sometimes drifted from the intended focus on outcomes into implementation details.

The session concluded with prioritization voting on potential domains, which successfully established clear categories but revealed the challenge of comparing fundamentally different types of work within a single framework.

Results

Domains Identified: 7 distinct areas emerged from the clustering exercise:

- Strategy & Vision Development
- Branding & Marketing
- Treasury Management
- Education & Onboarding
- Operations
- Accountability & Oversight
- Cross-Council Coordination

Additional Context: These 7 new domains would need to integrate with 3 existing priority areas (Ecosystem Growth, Global Community, Governance Iterations), creating a total of 10 potential work streams.

Geographic Priorities Surfaced: Strong emphasis on local nodes in Mexico, Brazil, and Kenya

Documented Outputs: 45 unique insights captured, 127 individual sticky notes created, 8 areas of clear consensus identified

Findings

The workshop revealed several critical insights about the DAO’s organizational needs:

Convergent Themes: Participants consistently identified education, strategy, and treasury management as foundational requirements. The convergence happened organically, suggesting these represent genuine community priorities rather than facilitated outcomes.

Tension Points: Clear disagreements emerged around developer education’s “long-term conversion” challenge—some viewed it as essential infrastructure while others questioned ROI. These productive tensions helped identify areas needing deeper exploration in Session 2.

Local vs. Global Dynamic: Participants articulated sophisticated understanding of how local initiatives (particularly in Latin America and Africa) could strengthen global positioning, moving beyond simple geographic distribution to strategic regional advantage.

Existing Work Awareness Gaps: Discussions revealed significant variation in delegates’ knowledge of current initiatives, suggesting communication challenges between Labs, Foundation, and DAO activities.

Learnings from Workshop 1

What Worked Well

- Delegates efficiently translated abstract ideas into seven concrete, actionable domains. The clustering process benefited from AI pre-organization while allowing human insight to refine boundaries and relationships.
- Discussions produced specific, implementable initiatives rather than remaining at strategy level. For example, education discussions included specific curriculum components, target audiences, and success metrics.
- The workshop surfaced the importance of local focus, with participants articulating how regional strategies in Mexico, Brazil, and Kenya could differentiate Scroll from other L2s. This wasn’t just about presence but about building culturally-adapted governance models.
- Disagreements were surfaced constructively, particularly around resource allocation and long-term versus short-term priorities. These tensions provided valuable input for governance team decision-making.
- The Miro board format enabled participants to see patterns emerging in real-time, creating “aha moments” as disparate ideas connected visually.

What Needs Improvement

- The emergence of 7 new domains plus 3 existing ones created an overwhelming scope of 10 potential work streams. This breadth made meaningful prioritization nearly impossible within workshop constraints. Participants struggled to compare fundamentally different domains (e.g., how to weigh Treasury Management against Education?), leading to surface-level treatment of complex topics. The lack of resource constraints in discussions meant participants could advocate for everything without confronting trade-offs.
- Without clear criteria for evaluation, voting became a popularity contest rather than a strategic assessment. The workshop needed explicit discussion of resource limitations, implementation capacity, and strategic dependencies. Future workshops should introduce forcing functions early, for example “if we could only fund three domains” or “with 100 points to distribute.”
- Some breakout groups spent excessive time on single topics while others rushed through multiple domains. The lack of visible timers meant facilitators couldn’t intervene effectively. Recommendations for improvement include:
  - Strict 10-15 minute timebox per domain exploration
  - Visible countdown timers for all participants
  - Facilitator prompts at 5-minute intervals
  - Maximum 2-3 domains per group to ensure depth
- The workshop attempted comprehensive coverage at the expense of deep exploration. Participants touched on all domains but couldn’t adequately explore implementation requirements, resource needs, or success criteria for any single area. Future design should embrace incompleteness: it is better to thoroughly explore 3 domains than to superficially cover 10.
- Small groups interpreted the breakout-room exercises differently, creating synthesis challenges. Some focused on “what” (specific initiatives) while others explored “why” (strategic rationale). This misalignment wasn’t discovered until full-group report-backs, too late for correction. Standardized templates and clearer instructions could improve consistency while preserving creative exploration. Briefing the breakout facilitators together ahead of time, so that they take a coordinated approach, could also address this issue.
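One of the forcing functions suggested above, “with 100 points to distribute”, can be sketched in a few lines of Python. This is only an illustration of the idea, not something used in CCC3: the function names and the sample ballots are hypothetical, and the rule that every ballot must spend exactly 100 points is an assumption.

```python
BUDGET = 100  # points each participant may distribute (assumed rule)


def validate(ballot, budget=BUDGET):
    """Reject ballots with negative points or a total other than the budget."""
    if any(points < 0 for points in ballot.values()):
        raise ValueError("points must be non-negative")
    if sum(ballot.values()) != budget:
        raise ValueError(f"ballot must sum to exactly {budget} points")


def tally(ballots):
    """Sum points per domain and return them sorted from highest to lowest."""
    totals = {}
    for ballot in ballots:
        validate(ballot)
        for domain, points in ballot.items():
            totals[domain] = totals.get(domain, 0) + points
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical ballots, invented purely for illustration.
ballots = [
    {"Education": 50, "Strategy": 30, "Treasury Management": 20},
    {"Strategy": 60, "Operations": 40},
    {"Education": 40, "Treasury Management": 40, "Accountability": 20},
]

result = tally(ballots)
for domain, points in result.items():
    print(f"{domain}: {points}")
```

Because every ballot must sum to the same budget, giving more points to one domain necessarily takes them from another, which is the trade-off the open dot-voting did not force.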

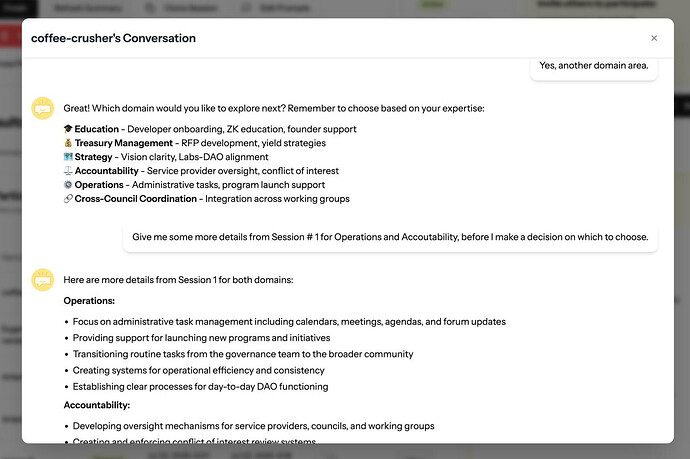

Session 2: Domain Exploration & Prioritization

Objectives

The goal of Session 2 was to explore the domains identified in Workshop 1. The session was designed to move from the “what should we do” question of Session 1 to the “how should we organize to do it” question, providing the governance team with structured input on which workstreams to prioritize and pre-populating the Miro board for Workshop 2.

We ran a conversational survey that walked through the pre-selected domains [Education, Branding & Marketing, Treasury Management, Strategy, Accountability, Operations, Cross-Council Coordination] one by one, covering as many as each participant wanted to explore and using the same structure for each:

- Strategic priorities: What should be Scroll DAO’s specific focus areas over the next 6 months?
- Key challenges: What are the biggest barriers to success and how should we approach them?
- Top initiative: If we could only fund one major initiative this year, what should it be?

At the end, participants were asked: What are the top 3 domains you most want to see Scroll DAO prioritize and fund over the next 6 months?

Process

Session 2 took place on July 22-25, and showcased Harmonica’s capability for structured yet flexible exploration. The system prompts were engineered to:

- Allow Expertise-Based Selection: Participants chose domains where they had the most context
- Maintain Consistent Depth: Three core questions per domain ensured comparability:
  - What should be the DAO’s specific priorities for the next 6 months?
  - What are the biggest challenges/barriers and how should we approach them?
  - If we could fund only one initiative, what should it be?
- Enable Natural Exits: Participants could explore domains one by one at their own pace
- End with Prioritization: Final ranking of top 3 domains for resource allocation

Post-session, the facilitators used Harmonica’s AskAI feature to extract specific insights, for example:

- “What specific concerns do delegates have about treasury management structure?”
- “How do participants see the relationship between strategy and branding?”
- “What metrics did participants suggest for accountability?”

This capability proved invaluable for workshop preparation, allowing facilitators to enter with nuanced understanding of delegates’ perspectives without reading all transcripts.

Another useful feature was exporting responses to JSON. It allowed us to feed raw responses into Claude the few times when AskAI (also powered by Claude) didn’t cut it.

Results

Metrics:

- 37 responses (28 completed, 75.7% completion rate)
- Average of 2.3 domains explored per participant
- 89% of participants provided detailed responses (>50 words per question)

Domain Prioritization Results:

| Rank | Domain | First Priority | Second Priority | Third Priority | Total Weight |
|---|---|---|---|---|---|
| 1 | Education | 20 | 8 | 5 | 81 |
| 2 | Strategy | 15 | 12 | 8 | 77 |
| 3 | Branding & Marketing | 10 | 15 | 10 | 70 |
| 4 | Treasury Management | 8 | 10 | 12 | 56 |
| 5 | Operations | 5 | 8 | 15 | 46 |
| 6 | Accountability | 3 | 5 | 10 | 29 |
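The Total Weight column is consistent with a 3/2/1 weighting of first, second, and third priorities (for example, Education: 20×3 + 8×2 + 5×1 = 81). The report does not state the weighting explicitly, so treat it as an inference from the numbers. A minimal Python sketch that reproduces the column and the ranking under that assumption:

```python
# Assumed weights, inferred from the table: 3 points for a first priority,
# 2 for a second, 1 for a third.
WEIGHTS = (3, 2, 1)

# (first, second, third) priority counts copied from the table above.
votes = {
    "Education": (20, 8, 5),
    "Strategy": (15, 12, 8),
    "Branding & Marketing": (10, 15, 10),
    "Treasury Management": (8, 10, 12),
    "Operations": (5, 8, 15),
    "Accountability": (3, 5, 10),
}


def total_weight(counts, weights=WEIGHTS):
    """Weighted sum of the priority counts for one domain."""
    return sum(count * weight for count, weight in zip(counts, weights))


totals = {domain: total_weight(counts) for domain, counts in votes.items()}
ranking = sorted(totals, key=totals.get, reverse=True)

for rank, domain in enumerate(ranking, start=1):
    print(rank, domain, totals[domain])
```

Changing `WEIGHTS` (for instance to `(5, 3, 1)`) is a quick way to check how sensitive the ranking is to the weighting scheme.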

Findings:

- Strong emphasis on practicality: Delegates stressed hands-on approaches over abstract theory across all domains
- Need for clear differentiation: Consistent calls for defining Scroll’s unique value proposition in the crowded L2 space
- Education as growth engine: Strong consensus that developer education and founder support are critical for long-term success
- Streamlined processes: Desire for reduced bureaucracy and clearer communication between DAO, Labs, and community
- Community-driven approaches: Local nodes and regional initiatives seen as valuable for growth and brand building
- Financial sustainability concerns: Multiple delegates emphasized need for professional treasury management and diversification

Learnings from Session 2

What worked well

- As this phase focused on ensuring delegates could provide deeper input into areas they knew about, we set up Harmonica to cover the domains they wanted to discuss. Around half of participants explored more than one domain.
- One delegate didn’t have enough context to answer a question, and Harmonica used the knowledge it had been given (the results of Workshop 1) to provide relevant context and make it easier to respond. In a more static format (e.g. a Google Form) this would have blocked them from completing it, and they would have had to abandon the session or switch to another tab to find the information.
- A few delegates talked to Harmonica in Spanish, which allowed them to express their opinions more fully and clearly. The synthesized insights were not translated but were used to pre-populate the Miro board, and the workshops were held in English.
- Post-session analysis using targeted AskAI queries revealed patterns invisible in the default summary. For instance, querying “What concerns do participants have about accountability structures?” surfaced consistent worry about “accountability theater”, i.e. appearing rigorous without actual oversight.

What needs improvement

- Initial domain groupings remained broad throughout, with Strategy overlapping significantly with Branding/Marketing and inconsistent category structures across domains. This points to an opportunity for developing clearer taxonomies that evolve iteratively as patterns emerge.
- The richness of responses created synthesis challenges. With 203 unique insights across 6 domains, identifying patterns while preserving minority views (or amplifying outliers) proved difficult. The AI’s initial synthesis required significant human review and restructuring for workshop use.
- Participants provided their richest insights when focusing on 2 domains, with responses maintaining highest quality at this depth. We should manage participants’ expectations regarding time commitment and depth of answers.

Quote from one of the delegates:

The platform is really good! When I interact with the Public Forum, I always measure my words. But this interface is really cozy. It feels like ChatGPT. I can be more assertive and honest, haha. I mean, we are always honest as a team. Still, sometimes we try to be polite with other DAO members, and sometimes you have to prioritize being polite instead of critical. This is the first time I’m writing with more subjectivity. I don’t have to consider the DAO members’ susceptibility as an organization and the subsequent political tensions in the Latam region. You can be direct and brief. We have language barriers and culture barriers. AI could be a neutral field in which to express opinions.

Workshop 2: Priorities → Structure → Metrics

Objectives

Our goals with the second workshop were to:

- Present findings from Harmonica Session 2
- Discuss domains, structures, metrics and next steps

Process

The workshop consisted of two identical 60-minute sessions scheduled to accommodate delegates from different time zones. The process began with participants reviewing the results from Session 2…

Harmonica’s built-in synthesis and JSON export functionality allowed us to prepare the whiteboard in advance:

- Each domain frame included priorities, challenges, and suggested initiatives
- Color coding distinguished high-consensus from contested items
- Direct quotes provided evidence for key points
- AskAI insights supplied facilitators with talking points

Results

Focused discussion informed by Harmonica insights produced the following outcomes:

Treasury Management: RFP approach rather than permanent council

- Professional service providers selected competitively
- Accountability council oversight rather than dedicated treasury council

Strategy Council: Internal focus with external representation

- 4 Scroll members + 1 external representative
- External member as liaison with other councils

Education: Deferred pending Labs coordination

- Avoid duplication with existing initiatives
- Leverage Local Nodes for distribution

Sequencing: Strategy must precede Branding

- Cannot effectively market without clear positioning
- Dependencies mapped across all domains
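The dependencies named in these results (Strategy before Branding, Labs coordination before Education, accountability oversight before Treasury) can be expressed as a small graph and ordered with a topological sort. The sketch below uses Python’s standard-library `graphlib`. The node labels are illustrative, and the graph covers only the dependencies the report names, not the full mapping produced in the workshop.

```python
from graphlib import TopologicalSorter

# Each key depends on every item in its set. The labels are illustrative
# shorthand for the dependencies described in the workshop results.
depends_on = {
    "Branding & Marketing": {"Strategy"},      # cannot market unclear positioning
    "Education": {"Labs coordination"},        # avoid duplicating Labs initiatives
    "Treasury Management": {"Accountability"}, # oversight mechanism required first
}

# static_order() yields prerequisites before the items that depend on them
# and raises graphlib.CycleError if the dependencies are circular.
order = list(TopologicalSorter(depends_on).static_order())

for step, item in enumerate(order, start=1):
    print(step, item)
```

Any order the sorter returns is a valid sequencing. Several may exist, since the three dependency chains are independent of one another.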

Learnings from Workshop 2

What Worked Well

- Harry pre-populated the whiteboard with challenges, initiatives, and strategic priorities, so the Session 2 results provided solid starting points
- Moving from treasury management (clearest path) to strategy/branding (more complex) worked well
- Short, focused bursts (3-5 minutes) kept discussions moving and prevented over-analysis
- When education emerged as the top priority but needed coordination with Labs, Eugene made the smart decision to defer rather than force a premature decision
- Themes naturally connected across domains (strategy informing branding, accountability overseeing treasury)

What Needs Improvement

- Music/audio issues disrupted flow multiple times, and Miro board editing access wasn’t properly configured initially
- Operations and accountability got minimal time despite being high priorities
- Same 4-5 people provided most verbal input (Eugene acknowledged this pattern from Workshop 1)
- The workshop revealed a key insight: many domains have dependencies that weren’t initially clear:
  - Strategy must precede branding - Can’t market unclear positioning
  - Education needs Labs coordination - Risk of duplication without alignment
  - Treasury needs accountability structure - Oversight mechanism required
- Difficulty distinguishing DAO work from Foundation/Labs responsibilities
- Some delegates felt the distinction between councils and working groups wasn’t clear enough

The workshop revealed that the co-creation process itself was maturing - moving from broad ideation to concrete roles and metrics. However, this transition exposed the need for different facilitation approaches as the work became more complex and interdependent.

The most successful moments came when the group could make clear, bounded decisions (treasury RFP approach, education deferral) rather than trying to solve everything at once. This suggests future workshops might benefit from even tighter scope and clearer decision criteria.

Quote from one of the delegates:

Yeah it was a great session, I was positively surprised how many participated. So thanks for organizing it so well also beforehand. And overall really structured and clear, especially the Miro board.

A couple of things I noticed:

Time left for exercises was sometimes not clear. Maybe use the timer and extend the time there - also in the 15 min breakout session, have the timer running (I believe it wasn’t?)

For the dot voting, maybe use the voting feature that Miro also provides (clear rules what to vote on, how many can votes each person has, etc.) - and/or have colour coding as a legend somewhere when the different colour mean different things

the Harmonica session already prepared the session well giving some context, and ideas/topics to focus on. I think that saved a lot of the time in the workshop itself

Content wise I think it was a good first step, and I am excited how we progress. Hope to get tasks/outcomes to act on practically out of the process at the end

[continued in the comment below]